I was at my colleague’s housewarming party not so long ago. After some sauna and refreshments the discussion turned to (surprise, surprise) programming. One of the guests opined that understanding data is all there is to programming. I nodded my head. After pondering it for a while, I came up with this blog post. Enjoy!

Data is king

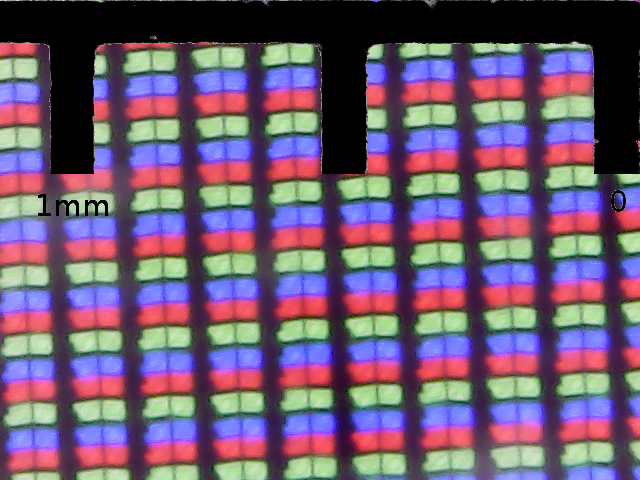

Although I could describe my work by saying that I write code, the code itself hardly matters compared to the data it processes. There is a fundamental duality between code and data. Code is either correct or incorrect, black and white so to speak. Data on the other hand is very colorful and often beautiful. Sometimes quite literally. When seen as data, an image is nothing more than a collection of intensity values for the red, green and blue diodes of a display.

Data is also in some sense something that is real, while code is invisible and something you can forget about — as long as it works correctly. Besides, the data in my line of work is of immense value. You see, in banking “cash is king” and data is basically money.

I cannot give you a formal proof of correctness of anything I’ve written. The code seems to be correct to the extent that I do not worry about it at night. Sometimes code reaches 100% correctness when all its bugs are declared to be features. But other than that, how does one write a piece of code that works well?

A good starting point is to understand that you, the developer, are a conduit between the real and the digital world, not unlike the shamans of the past. It is your understanding of the digital world that determines how well the software is going to work in real life and vice versa. The way in which this understanding manifests itself has a lot to do with data. The only practical way to verify the correctness of a complex program is to examine the data flowing in it.

It’s an evergreen wisdom in software development that you should “fold knowledge into data, so programming logic can be stupid and robust” as described by the legendary Eric S. Raymond in The Art of Unix Programming. The more I think about it, the more I like this statement and the more multifaceted it feels. I decided to pick three aspects for this blog post: first is the level of domain-driven design, the second is the ease of writing code, and the last point is how to put the pieces together.
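To make Raymond's rule concrete, here is a minimal sketch in Java. The class `AdoptionFees`, the categories and the fee amounts are all made up for illustration. The point is only that the knowledge sits in a table while the logic is a single lookup.

```java
import java.util.Map;

// Hypothetical example: the pricing knowledge lives in a table, not in branches.
final class AdoptionFees {
    // The data: one row per category. Supporting a new category means adding a row.
    private static final Map<String, Integer> FEE_BY_CATEGORY = Map.of(
        "cat", 80,
        "dog", 120,
        "rabbit", 40
    );

    // The logic: a single lookup that stays the same when the table grows.
    static int feeFor(String category) {
        Integer fee = FEE_BY_CATEGORY.get(category);
        if (fee == null) {
            throw new IllegalArgumentException("Unknown category: " + category);
        }
        return fee;
    }
}
```

The alternative would be an if/else chain that has to be edited, and retested, every time the price list changes. With the table, a change of prices is a change of data.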

Domain-driven design

The standard way to design enterprise software is to have it model something in the real world by using data objects that encapsulate terms and ideas from the business domain. The idea is to produce a system that is a reflection of its business requirements. While domain-driven design (DDD) continues to be a sound way to approach enterprise software, the way in which software is actually produced has started to change — hopefully for the better.

As digitalization has seeped deeper into our lives and the first generation of “smartphone native” people enter the workforce, the software we produce does not merely model reality, it is increasingly an integral part of it. When a child born in the 2010s was shown a floppy disk and asked what it is, their answer was: “Somebody made a toy of the save button.” There will come a point when nobody will understand the anecdote. The notion of a floppy disk will be forever fossilized as a symbol for saving.

When there is then nothing to model, the business requirements must be expressed in some other way. In particular, separating the developers from the business end and having them imitate it is more and more a losing proposition and my first guess at why so many software projects keep on failing.

Instead, I see that winning businesses embrace the shamanic qualities of the developer. The developers are supposed to create and shape the digital world, not model some obsolete process of past millennia. I understand that this is a tall order, and I haven’t yet seen a job ad that would state “creation of reality” as one of the duties. But then again, I suppose this is the evil machination at the core of Mark Zuckerberg’s Metaverse.

Be that as it may, the target for writing a good piece of software remains. It should exploit and reflect the data structures of its domain, and the extent to which the software developers truly understand the name of the game is of crucial importance. What does this mean in practice?

A repeating theme in software development is that we humans can really solve a problem only if it’s of the “divide and conquer” nature. We may dabble with other classes of problems, but so far there has not been a “cure for cancer.” If a problem can be divided into sub-problems, the sub-problems can be solved not only one at a time but also collaboratively by teams of people. The sub-problems work the same way. If they can be decomposed to their constituents, the process continues. Think about the TCP/IP stack for example. It has this structure. At the leaf level the “solutions” are about data that instructs the computer to do interesting side effects. What else can a computer really do?

While it’s important to have the model objects right, this only gets you so far. The big win of understanding the domain is that you get to delineate the first line of division into the sub-problems you are solving. The definitive textbook on the topic is by Eric Evans and goes by the name Domain-Driven Design: Tackling Complexity in the Heart of Software, published in 2003. Truth be told, I have not read it, and from what I have gathered, the book is a long-winded affair.

However, I know that the book defines the term bounded context which people say is the most important concept in it. It is easy to describe what it means. A bounded context represents some kind of an independent subsystem of a bigger system. Figuring out what the bounded contexts are in practice is another matter.

The discovery of a bounded context is best recognized as an emergent process and requires a high degree of trust between developers, architects, business people and domain experts. To pick just one of the bones of contention: from the business point of view, quick entry to market is a matter of life and death and the middle part of the software life cycle is a worry for another day. Experienced software developers know the business is heading into a tar pit, because the cost of change rises exponentially with time. They know that code starts to solidify and getting the fundamentals right can be attempted only once. The fear is that what should have been the equivalent of Michelangelo’s David ends up having its most masculine subsystem protrude from the forehead.

It takes an extraordinary team of people to resolve the conflicts. The best way seems to be to do what they have done in the emerging whiskey scene of Finland. One can sell gin while the whiskey is in the barrels.

Finally, bounded contexts are not a silver bullet. It may be impossible to define them if the business itself is still emerging. For this reason I think it is almost impossible to write code of the highest quality in a startup context. Also, many large businesses seem to be completely fine with a monolithic approach to software design. A good example is StackOverflow.com. The key is to not approach software design mindlessly and understand that a domain-driven design pays big dividends if implemented correctly. Dividing software components willy-nilly leads to the same outcome as blindly imitating a business without a thorough analysis of its boundaries. The outcome can be a mess that no amount of code formatting can fix.

When code starts to whisper

When I code something non-trivial, there always comes a distinct moment where all the pieces, code and data, sort of snap together and the damn thing compiles. A mysterious force akin to magnetism takes over, aligning and directing the flow of data. It is as though no computation actually ever happens. Whenever there’s a question, there’s an answer that can be constructed from the data at hand. An electrical engineer would perhaps say that the circuit closes. A psychologist would say that my mind is in the flow state.

It is a highly satisfying experience, and it is joys like this that make programming uniquely rewarding. Data is ultimately made of bits, ones and zeros. Like an ant, each bit is individually meaningless and minuscule. Like a child marvelling at an ant colony, I am delighted to see the data in my program flow effortlessly and produce the expected output. Frankly, I do not understand why so few people become software developers. It is like you get to work for your inner child instead of some parent called the boss.

It pays to emphasize that nothing good comes from negative thinking. Programming well requires a lot of effort but I suppose the trick is to not think about it that way. I think that programming should be effortless. I have also observed that I have a tendency to remove myself from the picture. It is not me writing the code; the code writes itself. It whispers to me. Crazy, I know.

The act of programming well has a lot to do with visualizing code and forming hypotheses. I’ve understood visualization is also a core practice in other disciplines. It is pleasant to actually see on the screen something that existed in your mind in the morning. Similarly, it feels good to validate your hypothesis in the affirmative. Being proven wrong in the programming world is not that unpleasant either. You learn something new that makes your next hypothesis that much better.

It seems to me that I leverage my experience by ignoring a lot of the unnecessary details that I know are getting in the way. Too much information wrecks productivity. Sure enough, I have difficulty focusing every now and then, but I have also taken drastic countermeasures to not be distracted. For example, I have gotten to the point where I do not use (much of) syntax highlighting in my editor anymore. Colors hurt my eyes. Code should be black and white and the colors that I see are the traffic lights of the test suite.

In short, programming well requires that you are in the zone. Rather than being overwhelmed by external stimuli, your mind takes in only the part that is relevant for you to have an internal dialogue about what should be done, and then you do just that, on your terms and at the pace that suits you. Caveat: you will lose track of time.

Composition

Got your mind in the right place and a solid understanding of a bounded context for your next microservice. Great! It is almost done except for actually programming it. I want to describe how I go about it in a bit more detail and how the “snapping effect” comes about. What follows is basically a speedrun of Clean Architecture by Robert C. Martin but with my idiosyncrasies thrown in.

The starting point is to have the domain objects fleshed out. These are your User, Pet, PetCategory and so forth. An object-oriented programming textbook would then instruct you to start thinking about what kind of messages these objects could send to each other. However, as anybody who has worked with big systems knows, this is a dead end, precisely because we want to verify the correctness of the system by examining the data flowing in it. To do that, the domain objects need to be serializable (to JSON). Data does not talk, it flows.

That is why we typically end up with the so-called anemic domain model in which you only have a redundant layer of getter methods to access data. An immutable record sounds much better, so let’s do some rebranding. It is basically the same thing, but remember that nothing good comes from negative thinking.
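As a rough illustration of the immutable record idea, here is a sketch using Java records (Java 16 or later). The fields of `Pet` and `User` and the hand-written `toJson` method are assumptions for the example. A real project would more likely use a JSON library, and this stand-in assumes the name contains no characters that need escaping.

```java
// Hypothetical domain objects as immutable records (Java 16+).
enum PetCategory { CAT, DOG, RABBIT }

record User(String name, String email) {}

record Pet(String name, PetCategory category) {
    // Hand-rolled stand-in for a real JSON library.
    // Assumes the name needs no escaping.
    String toJson() {
        return "{\"name\":\"" + name + "\",\"category\":\"" + category + "\"}";
    }
}
```

A record has no setters, compares by value, and prints its own contents, so `new Pet("Fluffy", PetCategory.CAT).toJson()` yields `{"name":"Fluffy","category":"CAT"}`. That is data you can log, diff and inspect as it flows through the system.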

In addition to having readable names, the value of domain objects comes from the ability to control how they are constructed. The idea is that the construction of a domain object is kind of a gate between potentially dangerous data from the external world to the sanitized internal world of the application. The contract is that whenever there’s an instance of a Pet, it is unnecessary to null-check its fields anymore. You do not constantly worry if Fluffy has its tail missing, do you?

In practice, I tend to declare the constructor private and have it only bind values to the fields of the data structure, following the guideline “constructors do no work.” It is then the job of static factory methods to describe all the supported ways in which the object can be constructed. Standard best practices apply: you can remove runtime assertions by leveraging static typing and benefit from the flexibility of polymorphism by depending on interfaces, not concrete implementations.
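A minimal sketch of that pattern could look like the following. The fields, the factory names `of` and `newborn`, and the validation rules are invented for illustration. A plain final class is used here because a Java record cannot make its canonical constructor private.

```java
import java.util.Objects;

// Hypothetical Pet domain object; names and validation rules are illustrative.
final class Pet {
    private final String name;
    private final int ageInYears;

    // The constructor does no work: it only binds values to fields.
    private Pet(String name, int ageInYears) {
        this.name = name;
        this.ageInYears = ageInYears;
    }

    // Factory for a pet whose age is known. All validation happens here.
    static Pet of(String name, int ageInYears) {
        Objects.requireNonNull(name, "name");
        String trimmed = name.trim();
        if (trimmed.isEmpty()) {
            throw new IllegalArgumentException("name must not be blank");
        }
        if (ageInYears < 0) {
            throw new IllegalArgumentException("age must not be negative");
        }
        return new Pet(trimmed, ageInYears);
    }

    // Factory for a newborn; it delegates so the rules live in one place.
    static Pet newborn(String name) {
        return of(name, 0);
    }

    String name() { return name; }
    int ageInYears() { return ageInYears; }
}
```

Since the only way to obtain a `Pet` is through a factory, code that receives one can rely on a non-blank name and a non-negative age without checking again.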

The story of the domain object is therefore not what kind of messages it can receive and send. It is the story of how it can be constructed. The story has a second chapter which tells what kind of relations the object has. In practice, predicate functions over the domain object are best implemented as methods of the domain object. Coincidentally, these are precisely the ingredients that define a first-order logic. You have objects and their relations.
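As a small sketch of predicates living on the domain object, consider the following. The fields and the predicate names `isAdult` and `isSameCategoryAs` are hypothetical, and a record is used only to keep the example short.

```java
// Hypothetical example: predicates and relations as methods of the domain object.
enum PetCategory { CAT, DOG, RABBIT }

record Pet(String name, PetCategory category, int ageInYears) {
    // Unary predicate: a property of the pet itself.
    boolean isAdult() {
        return ageInYears >= 1;
    }

    // Binary predicate: a relation between two pets.
    boolean isSameCategoryAs(Pet other) {
        return category == other.category;
    }
}
```

Predicates written this way can be passed around as method references, for example `pets.stream().filter(Pet::isAdult)`, which keeps the calling code close to the vocabulary of the domain.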

Taking a view on all the data, the process of creating the domain objects divides it into sets of ‘good’ and ‘bad’ data. It then follows that functions operating on the domain objects can rely on sort of an implicit certificate. The inputs were OK upon invocation. In contrast, if there’s a function with a string argument as so often happens, one should ask if the parameter should be named attackerControlled. See for yourself in your codebase how deep into the system raw user input can travel.

Settling on interfaces that only depend on the domain objects pulls the codebase in two directions. It necessitates some kind of a cleansing layer to be implemented. When input from the user comes in, it is validated by feeding it to the factory methods. That would be the controller layer or anything of that nature. I think of it as the ‘protective shell’. The other direction is to think about how the internals of the system can be designed to be stable over time.
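The protective shell can be sketched roughly as follows. Everything here is hypothetical: the `PetName` type, its validation rules, the `PetRegistry` service and the response strings are made up to show the shape of the idea, not a particular framework.

```java
import java.util.Optional;

// Hypothetical domain type: the only way to get one is through parse().
final class PetName {
    private final String value;

    private PetName(String value) { this.value = value; }

    // The gate: raw text either becomes a PetName or is rejected here.
    static Optional<PetName> parse(String attackerControlled) {
        if (attackerControlled == null) {
            return Optional.empty();
        }
        String trimmed = attackerControlled.trim();
        boolean valid = !trimmed.isEmpty()
            && trimmed.length() <= 40
            && trimmed.chars().allMatch(c -> Character.isLetter(c) || c == ' ' || c == '-');
        return valid ? Optional.of(new PetName(trimmed)) : Optional.empty();
    }

    String value() { return value; }
}

// Internal code depends only on the domain type and never re-validates.
final class PetRegistry {
    static String register(PetName name) {
        return "Registered " + name.value();
    }
}

// The protective shell: the only place that sees raw input.
final class PetController {
    static String handleRegister(String rawName) {
        return PetName.parse(rawName)
            .map(PetRegistry::register)
            .orElse("400 Bad Request: invalid pet name");
    }
}
```

The raw string travels exactly one call deep. Past `PetController`, the compiler guarantees that nothing can reach `PetRegistry.register` without having gone through `PetName.parse`.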

In software development, reusability is basically the same thing as composability. The way I have approached this so far has been through an ad hoc analysis of all the ways the domain objects snap together to produce some more interesting results. This then gives you functions like Bill sell(User buyer, Pet pet), Invoice invoice(Bill bill) where perhaps an invoice object can be converted to a PDF document. I remember once working for months on a project only to discover that I had ultimately come up with a way to do the most complicated ETL job of the business with a single line of code by composing the right function calls together. Everything just snapped together. I had just the right pieces.
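Here is a small sketch of how the two signatures mentioned above could compose. Only the signatures of `sell` and `invoice` come from the text. The record fields, the `Shop` class and the invoice wording are assumptions made up for the example.

```java
import java.util.function.BiFunction;

// Hypothetical domain records; the fields are illustrative.
record User(String name) {}
record Pet(String name, int priceInCents) {}
record Bill(User buyer, Pet pet, int amountInCents) {}
record Invoice(String text) {}

final class Shop {
    static Bill sell(User buyer, Pet pet) {
        return new Bill(buyer, pet, pet.priceInCents());
    }

    static Invoice invoice(Bill bill) {
        return new Invoice(bill.buyer().name() + " owes " + bill.amountInCents()
            + " cents for " + bill.pet().name());
    }

    // The pieces snap together because the output type of one step
    // is exactly the input type of the next.
    static final BiFunction<User, Pet, Bill> SELL = Shop::sell;
    static final BiFunction<User, Pet, Invoice> SELL_AND_INVOICE =
        SELL.andThen(Shop::invoice);
}
```

Calling `Shop.SELL_AND_INVOICE.apply(new User("Ann"), new Pet("Fluffy", 12000))` produces an invoice with the text `Ann owes 12000 cents for Fluffy`. The composition is a single expression, and the compiler rejects it if the types in the middle do not line up.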

Concluding remarks

I was proud of the ETL code. It ended up in the trash bin, though. While the end result was spanking clean, the problem with an ad hoc approach is that the end result is fragile when business requirements change. There will be an urge to sacrifice the interfaces and stick just one boolean or string argument somewhere, causing a painful ripple effect in the test suite that does not compile anymore. Often this is because it takes effort to understand the abstraction. The cleaner it looks, the more effectively it hides complexity that nonetheless exists. Meanwhile, many developers would just like to get the job done and forgo a week-long analysis of the source code.

I think it’s in this context where functional programming ideas come into play. Rather than having an ad hoc collection of service interfaces, why not ask the even more abstract question of how to compose data types in general? We understand the process of solving a problem in software development as a process of decomposition. It is then only natural to ask what are the ways to compose things back together.

One of the most recent developments in my programming career has been to get to know algebraic data types, as lectured by Dr. Bartosz Milewski among other FP wizards. I must admit though that I usually fall asleep while watching Milewski’s lectures, which is not an unhappy end result either.

Anybody who has spent a lot of time with C++ template metaprogramming or TypeScript knows there is something more to data types than is generally understood in mainstream software development circles. I touched on this topic in my ReactJS/Redux blog.

Turns out that there are ways in which you can take types like User and Pet and form meaningful types User + Pet and User * Pet. You can further go on to write equations on data types and solve them. The mathematical structure of these algebraic data types is a semiring.
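For readers who have not met these before, here is one way the two operations might look in Java 17 or later. The product User * Pet is a record holding both values, and the sum User + Pet is a sealed interface whose value is one or the other. The names `Adoption`, `Recipient`, `ToUser`, `ToPet` and `Greeter` are invented for the example.

```java
// Hypothetical base types.
record User(String name) {}
record Pet(String name) {}

// Product type User * Pet: a value holds a User AND a Pet.
record Adoption(User owner, Pet pet) {}

// Sum type User + Pet: a value is EITHER a User OR a Pet, never both.
sealed interface Recipient permits ToUser, ToPet {}
record ToUser(User user) implements Recipient {}
record ToPet(Pet pet) implements Recipient {}

final class Greeter {
    // Code consuming a sum type handles each alternative separately.
    static String greet(Recipient recipient) {
        if (recipient instanceof ToUser u) {
            return "Dear " + u.user().name();
        }
        if (recipient instanceof ToPet p) {
            return "Good " + p.pet().name();
        }
        throw new AssertionError("unreachable: Recipient is sealed");
    }
}
```

The arithmetic names come from counting values. If one type has a possible values and another has b, their product has a * b values and their sum has a + b. Laws such as distributivity then hold up to a change of representation, which is roughly what the semiring structure refers to.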

Dr. Milewski’s claim is that this is the missing piece when designing reusable code. Once mastered, the composition rules are fully general and at your disposal in every codebase. Think of them as some kind of design patterns from eternity. It will be interesting to see how the story of algebraic data types unfolds in my career.

I would like to conclude by stating that programming well is about understanding data one way or the other. We do not generally write software for the sake of software. It is the revenue of a business that puts food on the table. The best programmers go out of their way and try to actively understand what is expected of the software. Then they encode that understanding into the data types of the software. Secondly, if you are going to be doing this for decades, it had better be fun. Finally, the best programmers understand the process of decomposing and composing data both intuitively and at a theoretical level. Programming well is never about internalizing a fixed set of rules. You create the rules in the form of code. The data is then all that can be produced by running the code over and over again. If the code answers all the questions posed to it, it is good code.