Would it be time to give maths a second chance?

Every now and then I come across social media campaigns and the like that aim to inspire non-techies to take on programming. While I praise their goal, these campaigns tend to have one thing in common that makes me cringe. They try to be inclusive at the cost of perpetuating a misguided image of the role of maths in software development. The sentiment is that you do not need maths to become a software developer.

I do not spend my time at work looking for x. It is a rare occasion if I get to divide two numbers, after null and NaN checks of course. Practically none of the stuff I do at work requires any maths.

So I have to concur that you do not really need maths for programming, but my gripe is that the question is so loaded with prejudices that a simple no for an answer is not sufficient. And I bet the INTJ death stare accompanying my answer does not lighten the atmosphere either.

You see, I find maths absolutely crucial for programming well, and I doubt if people are really that bad at it in the end.

Not that you can flip some switch and suddenly become a math wizard, but I think the whole thing just goes to show how people’s perception of maths is based on unfortunate circumstances at school.

The problem is that we let education systems define what being good at maths means at a very delicate stage in our development. Children are even divided into groups by adults based on their performance. If you suck by the education system standards, you are singled out, and their policy will not be to pick you up. They will either dumb down the lectures, which you will notice, or give you more of the same. If you are somebody who finds sitting still difficult, too bad for you! You will carry the ‘I suck at maths’ label for the rest of your life.

I cannot give you a hero’s story in which I would have struggled with maths and finally, after much strife, would have excelled. I did not give the subject much of a thought before university, and those times I did, I found it fascinating. Then as an adult, I have gotten to the point where I can safely say that I am a happy dilettante with no real skills to actually tackle mathematical problems. I use camomile tea and YouTube math lectures as a natural substitute for Valium.

So, I can only imagine what it feels like to learn to doubt your maths skills and consequently learn to detest the subject, for this is the pattern I see. There’s the doubt and then there’s the hate.

It takes brains to do maths. Ours is a social one. Perhaps here lies the crux of the problem. The natural state of affairs is that kids learn social behavior in their peer group with adults being the caretakers who’ll accept you back once the day is done. When kids socialize, the rules of the play emerge spontaneously. Kids practice negotiating social situations through play.

Maths is different. Maths is abstract and its rules are not set by the peer group. The rules of maths are of the form “given X, then Y.” If you get stuck at X, you are asking for trouble. The whole point is that if X holds, then Y follows, and the proof is in the pudding. Never mind how X came to be.

Associating difficulties in maths with difficulties in abstract thinking is a no-brainer, but the more I think about it, refusal of the basic formula of a mathematical statement seems to be the one problem that rules them all.

First, there’s the agony of getting stuck in irrelevant details. I have a great many examples of this from my own life, but I want to share an anecdote from the world of adult education recounted to me by my mother, who is a teacher. Her maths teacher colleague was going over the geometrical properties of the circle and illustrated them on the blackboard. The problem was she drew the circle in a sloppy way. One of the adult students raised their hand and pointed this out. The teacher assured them that this is not a problem: we can assume that the circle is drawn perfectly. “But why, I never thought of that!”, replied the student. “All my life I thought you had to draw shapes perfectly in order to study them!”

Another variation of the theme is difficulty accepting a proposition because of an aversion to authority figures. I have met so many people in my life who claim they suck at maths because they were at odds with their maths teacher. I think this is a case where the person found it difficult to toggle between the maths and social contexts of their brain. If a child or a teenager tries to learn maths in the social mode, it will appear that it is the adult who “sets the rules”, which is a conflict. “I do not accept X because it was you who proposed it.”

A third and final example that in my experience bites us adults the hardest is of the form ‘if not entertaining, then zero f*cks given.’ Kids and adults alike enjoy solving puzzles for a dopamine rush. Maths is also a treasure trove of interesting historical anecdotes that relate to technology, like the birth of geometry in ancient Egypt to deal with land disputes after the Nile flooded. The problem with puzzles and stories is that maths is not about them: it is about the connection between X and Y. To truly enjoy maths, you want to be rather high…in Maslow’s hierarchy of needs 😅. You want to study maths for its own sake.

So, let’s do a bit of a reset and let go of the grudges against the poor maths teacher who is senile by now anyway. And let’s not immediately assume there has to be some shiny prize at the end of doing a bit of maths. If you were to give maths a second chance, what should you know if you want to become a software developer?

I think a lot of it boils down to developing your intuition not just about the craft of designing data structures and algorithms, but the world of abstract things as a whole. It is nice to see where programming sits in the universe of geekiness. I could probably write the rest of my life on this topic, but let me just develop a couple of arguments with pointers to interesting stuff I have encountered on my math lecture adventures.

It is not so different from software design

Maths is made of a multitude of disciplines. Some of them are more mainstream, some are more esoteric. Every discipline comes with its own concepts and theory. If you want an overview of how the different mainstream areas mesh together, you may watch this piece of awesomeness: link.

In my experience, no mathematical result will help you directly to write a single line of code. And I have my doubts about every piece of software produced by academics. So you could just yell “YAGNI: You ain’t gonna need it.” But hold on, remember we should not look solely for utility? Let’s consider the good ol’ format ‘given X, then Y’ but with a twist. What happens if the X part gets more and more elaborate?

For example:

- Given a set G and operation · satisfying the group laws, the tuple (G,·) forms a group.

- An abelian group is one for which g_i · g_j = g_j · g_i for all elements g_i, g_j in G.

- Let F be a set. Given an additive abelian group (F,+) and a multiplicative abelian group (F \ {0}, ×), with × distributing over +, the tuple (F,+,×) forms a field.

While every statement on the list is true, I hope that they are gibberish to you. Naming things concisely and expressively is hard not only when it comes to code. But the other side of the coin is that sometimes certain concepts just have to be understood before you can make further progress. If you want to understand what a field is, you first need to understand what an abelian group is. And to understand abelian groups, you need to understand groups in general.
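To make the layering a bit more tangible, here is a minimal Python sketch that checks the definitions above by brute force on small finite sets. The function names (`is_group`, `is_abelian_group`, `is_field`) and the modular arithmetic examples are my own illustrative choices, not something from the lectures, and the field check also tests the distributive law that the usual definition of a field requires.

```python
from itertools import product


def is_group(elements, op):
    """Brute-force check of the group laws on a small finite set."""
    elements = list(elements)
    # Closure: combining two elements must stay inside the set.
    if any(op(a, b) not in elements for a, b in product(elements, repeat=2)):
        return False
    # Associativity: (a·b)·c == a·(b·c).
    if any(op(op(a, b), c) != op(a, op(b, c))
           for a, b, c in product(elements, repeat=3)):
        return False
    # Identity: some e with e·a == a == a·e for every a.
    identities = [e for e in elements
                  if all(op(e, a) == a == op(a, e) for a in elements)]
    if not identities:
        return False
    e = identities[0]
    # Inverses: every a has some b with a·b == e == b·a.
    return all(any(op(a, b) == e == op(b, a) for b in elements)
               for a in elements)


def is_abelian_group(elements, op):
    """A group whose operation is also commutative."""
    elements = list(elements)
    return is_group(elements, op) and all(
        op(a, b) == op(b, a) for a, b in product(elements, repeat=2))


def is_field(elements, add, mul, zero):
    """Two abelian groups sharing one set, tied together by distributivity."""
    elements = list(elements)
    nonzero = [a for a in elements if a != zero]
    distributes = all(mul(a, add(b, c)) == add(mul(a, b), mul(a, c))
                      for a, b, c in product(elements, repeat=3))
    return (is_abelian_group(elements, add)
            and is_abelian_group(nonzero, mul)
            and distributes)


def add_mod(n):
    return lambda a, b: (a + b) % n


def mul_mod(n):
    return lambda a, b: (a * b) % n


print(is_field(range(5), add_mod(5), mul_mod(5), zero=0))  # True
print(is_field(range(6), add_mod(6), mul_mod(6), zero=0))  # False
```

The integers modulo 6 fail because 2 × 3 lands on 0, which is outside the set of nonzero elements. Notice how `is_field` reads almost like the sentence that defines a field, precisely because the lower levels have names.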

The classification and hierarchy of structures is what mathematics ends up being. We arrange the structures into levels of abstraction to see patterns that are otherwise lost in details. You can classify and arrange the pieces to see different patterns. It is ultimately up to you, as long as you are honest and stick to the Y follows from X format.

All of this has a major bearing on writing software well. It is not so much the mathematical results but the art of thinking systematically at different levels of abstraction.

It is, for example, important to understand the notion of referential transparency, which states that you can search-and-replace, i.e. inline, the term “group laws” above with the actual equations that define a group without changing the meaning. It is equally important to understand that doing so will introduce unnecessary details to the discussion. You pick the level of abstraction that is suitable in each particular context.
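In code, the same idea might look like the sketch below. The helper `is_identity` and the choice of addition modulo 4 are hypothetical, picked only for illustration. Because the helper is a pure function, replacing its name with its body gives the same answer; it just drags the details along.

```python
def is_identity(e, elements, op):
    """Named concept: e leaves every element unchanged from both sides."""
    return all(op(e, a) == a and op(a, e) == a for a in elements)


def add_mod4(a, b):
    return (a + b) % 4


# Using the name keeps the discussion at a high level of abstraction...
named = is_identity(0, range(4), add_mod4)

# ...while inlining the definition says exactly the same thing, with more noise.
inlined = all((0 + a) % 4 == a and (a + 0) % 4 == a for a in range(4))

assert named == inlined
```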

From a software architect’s point of view, it is also important to understand that there are many ways to layer and organize a software system. Some serve a functional purpose while others a non-functional one. Some are present at (micro)service level, some at class method level. It is like looking at a map at different resolutions or turning the whole map upside down. Or maybe let’s not turn a map upside down, but you get the point.

Another aspect of mathematical theories relates to the way in which they emerge. You see, mathematics is discovered, not invented. This too has a great parallel with software development, for the only way to construct a complex piece of software is to discover its laws, which often relate directly to the physical world around us.

It is only by discovering the algorithms and data structures that you can distinguish between accidental and essential complexity, which is one of the core skills of a software developer. And when it comes to data in the real world, why would you reject mathematically battle-tested structures in favour of something you roll on your own using magic strings?

And what do we do when things go awry? Treating a program like a mathematical proof, we rewind the argument to a point where we still understand what is going on. We literally pause the execution of a program with a debugger and follow its execution step by step until the bug is found. It is not so different from following a mathematical proof.

In programming, a little logic goes a long way

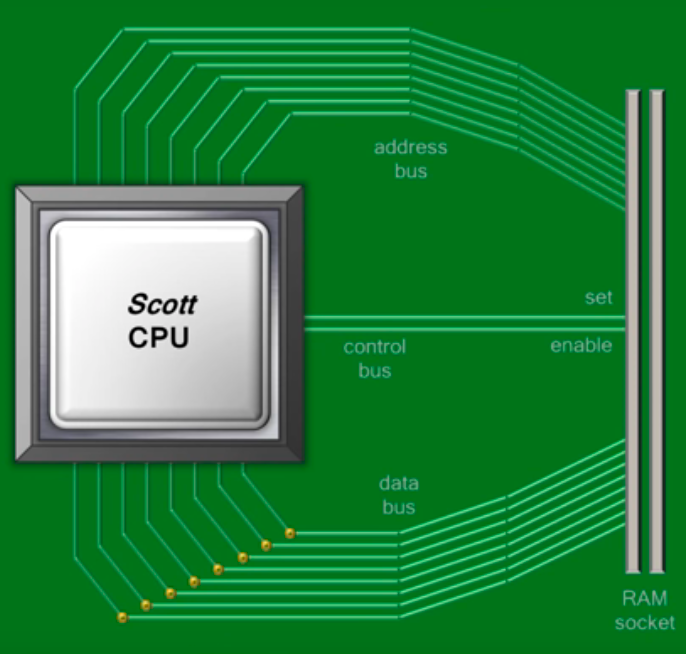

A computer is essentially a device that has two components called CPU and RAM. The job of the CPU is to write and read bit sequences to and from the RAM. The job of the RAM is to store those bit sequences. The stream of bit sequences from the RAM to the CPU is made of instructions and data. The instructions tell the CPU how the data is to be manipulated.

I bet if you are reading this blog post you are a tech-savvy person and knew this much. By inspecting the picture above, you can also infer that it’s depicting a computer with an 8-bit architecture. That is, a computer that manipulates data one byte at a time.

The actual computation inside the CPU happens practically instantaneously, but it takes time to shuffle the bits around. And if we were to extend the discussion to hard drives and networks, the timescales blow up big time. In reality, CPUs end up idling most of the time. It is a relatively rare occurrence for the CPU to do anything smart.

And once the CPU has a hold of a piece of data, what can it do with it? Take the derivative? Compute an integral? The technicality to understand is that the CPU reads data into so-called registers. For example, the registers might be 16 bits wide, so that the above 8-bit machine would require two reads to fill one. For the computer to be feature complete, it needs to be able to first read data into a register and then, after manipulating it, write the modified content back to memory. A 16-bit register has 2^16 possible inputs that map to 2^16 possible outputs. That’s it. In a way, the CPU does not know what it is doing beyond the instruction it is currently executing.
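If you want to play with the read, manipulate, write cycle, here is a toy simulation in Python. Everything in it is an assumption made for illustration: the `ram` contents, the helper names `load16` and `store16`, and the choice to store the high byte first. Real CPUs differ in all of these details.

```python
REGISTER_MASK = 0xFFFF  # a 16-bit register can hold 2**16 different values


def load16(ram, address):
    """Fill a 16-bit register with two 8-bit reads (high byte first, by assumption)."""
    high = ram[address]
    low = ram[address + 1]
    return (high << 8) | low


def store16(ram, address, value):
    """Write a 16-bit register back to memory as two 8-bit writes."""
    value &= REGISTER_MASK
    ram[address] = value >> 8
    ram[address + 1] = value & 0xFF


ram = bytearray([0x12, 0x34, 0x00, 0x00])  # toy RAM, one byte per address

register = load16(ram, 0)                  # two reads give 0x1234
register = (register + 1) & REGISTER_MASK  # manipulate, staying within 16 bits
store16(ram, 2, register)                  # two writes: ram[2], ram[3] = 0x12, 0x35

print(ram.hex())  # 12341235
```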

It is the job of computer programs to store instructions in the correct order to produce meaningful bit sequences in the memory. What is the role of maths here? What are the instructions that transform, say, a 16-bit sequence into any other 16-bit sequence?

The question can be understood in two ways. We can talk either about the instruction set, the total number of instructions that the CPU understands, or the number of instructions to sequence to compute the desired outcome. If you think about this for a while, you will realize that this is a balancing act. The two approaches are a design with a handful of instructions that need to be sequenced or a lot of specialized instructions that get a particular bit transformation done in one go.

Taken to the extreme, one can actually realize a working computer with just one instruction: flipping bits one at a time. Sure, it will be painfully slow, but hey, no difficult maths was involved!

So, here’s an electrical engineer’s take on maths in programming. Would you like to have a program that takes one gigabyte of hard disk on your smartphone and takes an eternity to run or one that takes up say a megabyte or two and runs hella fast?

Introducing logic!

Thanks to logic, we can design instruction sets that work universally. We can frame all “computation” that the CPU can do in terms of a handful of binary operations and the bit flip, also known as logical negation. Take for example the logical operation called XOR, the exclusive or. Its truth table is shown below.

The XOR of 11111111 and 00000000 is computed bitwise and the answer is 11111111. Now you can for example clear a register to all zero bits by computing the XOR of its content with itself.
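You can try this out yourself. In Python the bitwise XOR operator is `^`, and the sketch below repeats the two examples from the paragraph above. The variable names and the sample register content are made up.

```python
a = 0b11111111
b = 0b00000000

# XOR is computed bit by bit, so every 1 in a survives against a 0 in b.
print(format(a ^ b, "08b"))  # 11111111

# XOR of any value with itself clears it: each bit meets its own copy.
register = 0b10110010  # arbitrary sample content
register ^= register
print(format(register, "08b"))  # 00000000
```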

You can write down all the other possible truth tables by going through every combination of the four output bits: 2^4 = 16 in total. That would suggest that we would require 16 instructions on two operands and the negation instruction plus the instructions for reading and writing to memory. The reality is a bit more nuanced. For example, you may not want to use the logical operation of contradiction, and it turns out that many of the logical operations are actually redundant.
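Here is a small sketch of both claims. It enumerates the sixteen truth tables and then illustrates the redundancy by building NOT, AND, OR and XOR out of a single operation, NAND. That NAND alone suffices is a standard result from logic; the helper names below are just illustrative.

```python
from itertools import product

# The four possible input rows: (0,0), (0,1), (1,0), (1,1).
INPUTS = list(product((0, 1), repeat=2))

# A binary operation is fully described by its four output bits.
ALL_TABLES = list(product((0, 1), repeat=4))
print(len(ALL_TABLES))  # 16


def nand(p, q):
    return 1 - (p & q)


# Everything below is expressed using nand only.
def not_(p):
    return nand(p, p)


def and_(p, q):
    return not_(nand(p, q))


def or_(p, q):
    return nand(not_(p), not_(q))


def xor(p, q):
    return and_(or_(p, q), nand(p, q))


def table(op):
    """Output column of op, in the row order of INPUTS."""
    return tuple(op(p, q) for p, q in INPUTS)


print(table(xor))  # (0, 1, 1, 0)
```

The contradiction mentioned above is the table `(0, 0, 0, 0)`: it ignores its inputs altogether, so dedicating an instruction to it would buy you very little.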

| p | q | p XOR q |
|---|---|---|
| 0 | 0 | 0 |
| 0 | 1 | 1 |
| 1 | 0 | 1 |
| 1 | 1 | 0 |

I hope you are convinced that the kind of maths involved here is dead simple. You apply the same logical rules over and over again until the desired result has been achieved. Indeed, a little logic will go a long way.

There’s no escaping it

Let us finally take an intuitionistic stance to the topic. You cannot ultimately escape maths. No matter how much you dislike it, you still want to be understood by others, right? Turns out that programming and human languages are both instances of “first-order predicate calculus”. That’s one of those fancy abstract maths words, but it just means “the theory of logical languages of practical utility in the real world”. For you to fit into a social group, you have to make sense of yourself. Some exceptions to the rule apply to the social contract of infants, drunkards and Danish people.

And since the act of forming any sentence involves maths, you start to wonder if we can even perceive anything at all that would not have some mathematical structure behind it. I can only imagine the most primordial of things like pain. But then again, doing maths makes my brain hurt sometimes…

No matter how trivial, chaotic or random, mathematicians have tried to tackle it. Not that maths is a panacea. On the contrary, there are a great many fields of maths that are so difficult that nobody has made any progress in over a century, and consequently you can be as wise as the next-door university professor just by understanding the problem statement.

The Collatz conjecture comes to my mind as a nice real world example. In general, buzzwords like ’nonlinearity’, ‘chaos’ and ‘curse of dimensionality’ are all related to phenomena that we as a species just do not grasp very well. But do not be fooled by randomness. Probability theory is one of the most beautiful things I have ever seen and that, my friend, we have locked down.
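To show how little it takes to understand the problem statement of the Collatz conjecture, here it is as a few lines of Python. The function name `collatz_steps` is my own. The rule: halve the number if it is even, otherwise triple it and add one, and repeat. The conjecture says you always end up at 1, and nobody has managed to prove it.

```python
def collatz_steps(n):
    """Count how many steps it takes to reach 1 from a positive integer n."""
    if n < 1:
        raise ValueError("n must be a positive integer")
    steps = 0
    while n != 1:  # whether this loop ends for every n is the open question
        n = n // 2 if n % 2 == 0 else 3 * n + 1
        steps += 1
    return steps


print(collatz_steps(6))   # 8
print(collatz_steps(27))  # 111
```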

Let’s flip the script. There are aspects of reality that we understand effortlessly, some require practice, and the nonlinear bits trip us up every time. Maths requires effort and is able to describe complexity to the extent that we can make sense of it. You do not really need maths in programming, because in general, we would like to craft systems that operate effortlessly. As a sometimes overlooked extra, we would like the users of our systems to also interact with them effortlessly, drunk infant Danes included.

But you nonetheless need maths to understand what it means to be a human. Maths is the template language of human perception. It is the very language of cultural evolution with no allegiance to any culture in particular, making it resilient to human screw-ups like genocides.

Fragments of clay tablets showing how arithmetic was done millennia ago may be hidden in the sand but are perfectly understandable once restored, just like the shards of silicon that we will leave behind for future hominids to wrap their heads around.

References

This blog would not have been possible if it were not for the following courses/lectures that I sincerely recommend to everybody who is even mildly interested in the topics I addressed: