Memorizing the flags of the world is a good exercise for your brain. What kind of strategy would you use? I have noticed that grouping the flags by visual similarity helps me, which made me think about the topic from a programmer’s point of view. I ended up jacking code from the 1970’s which rekindled my long forgotten passion for numerical linear algebra and got me cursing the Taliban regime of Afghanistan for messing with my principal component analysis code.

Introduction

I like to play quizzes with my nephew. A particular favorite of ours is to pair the flags of the world to countries. I have noticed that the task gets a lot easier if you can tell a short story about each country in your head. The superb GeographyNow series on YouTube has proven to be mightily useful for this purpose despite the platform’s general demise to clickbait-ridden crap. As for my nephew, I suspect he just uses raw cognitive power and the flags served as a mere gateway drug to Pokémon.

I have long pondered about an observation I have made when playing the quiz on two competing sites, JetPunk and Sporcle. One version of the quiz has the flags loosely grouped by similarity while the other randomizes the list on each trial. At least for me, the first variant is significantly easier to play.

This observation is sure to have some solid neuroscience behind it; all I can remember for this blog post are the chapters on associative memory in Kahneman’s Thinking, Fast and Slow (2011). As I am not a brain surgeon nor a mind reader, I do not have much more to say about the functioning of the human brain. But since I am a programmer, I also find the following simpler question interesting: How would you order the flags of the world based on visual similarity programmatically?

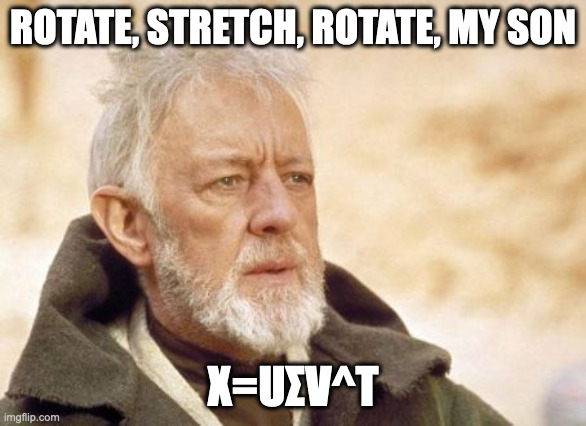

A faint voice whispered in my head: “Use Principal Component Analysis, son.” Indeed, I vaguely remember reading about this topic almost a decade ago at university but the references are just about garbage-collected from my main memory. I therefore decided that now is as good time as any to get my hands dirty and wrote this blog.

Principal component analysis

Linear algebra is a discipline taught at university to just about all students of the STEM subjects. It is concerned with vector spaces. Statistics is a trauma-inducing compulsory course for humanists. It is concerned with aggregates of data. Data science is the bastard son of the two, and Principal Component Analysis (PCA) is a technique born out of this unholy cerebral knowledge.

More technically, PCA takes any data matrix and outputs a list of orthogonal vectors with associated weight coefficients. The weight coefficients order the vectors in terms of how well they explain the variation within the data. More visually, imagine a cloud of data points in a multidimensional space. PCA is a technique for drawing coordinate axes in this space. The axes are ordered in such a way that fewer and fewer points aggregate along them if you imagine looking at the data along each axis in turn.

As imagining multidimensional data is a tall order, it is perhaps helpful to think about a two-dimensional example. The data will have two principal component axes. The first axis corresponds to a least squares fit to the data, while the second axis is orthogonal to the first one — in two dimensions there is only one choice up to sign.

I also like the analogy given by Josh Starmer on his StatQuest channel. Imagine that you are a chemist and you are given a bunch of cocktails. You are not allowed to drink them. Instead, you are asked to come up with a recipe for each cocktail (data point). A principal component analysis on the cocktails yields a list of the liquors (vectors) and their proportions (weight coefficients). You may then hypothesize that the first principal component of a cocktail is vodka with weight 40 ml × 0.916 g/ml = 36.64 g (🤓).

I should also mention that in practice PCA is equivalent to performing Singular Value Decomposition (SVD) on the data matrix. However, that is the extent I want to touch on the theory. It is futile for me to attempt any in-depth explanation. Firstly, SVD is typically the pinnacle of a course in linear algebra and its beauty and significance can only be appreciated once the foundational aspects of the discipline are mastered. Secondly, PCA and its connection to SVD has been widely discussed in several blogs in recent years as the technique is highly useful in all kinds of machine learning applications — this is very much the math that makes things like face recognition possible.

Suffice it to say that the legendary Gilbert Strang ranked SVD as the most elegant piece of linear algebra there is. His lectures are freely accessible from here.

The algorithm

I still have to say something about how PCA helps in the task at hand. I need to convince you that it’s possible to write a program that takes the flags of the world as input and spit them out in a such a way that flags resembling each other are grouped together. For example, one could argue that the flags of the Nordic countries should more or less follow each other: 🇩🇰, 🇫🇮, 🇮🇸, 🇳🇴, 🇸🇪.

There is plenty of room for creativity for this problem, but a relatively straightforward recipe is as follows:

- Construct a data matrix X out of the bitmaps of the country flags

- Perform SVD on the data matrix X=UΣVT

- Construct the PCA score matrix T=UΣ

- Truncate the score matrix to the first rank columns

- For each country n, output the country emoji and the nth row of T

- Sort the output based on the scores

The first step is to design the data matrix. The convention in PCA literature is to construct it so that each row corresponds to a repetition of an experiment while each column is a feature of the experiment such as a reading on some kind of a sensor. On the other hand, one can easily download images of the country flags from here. One then ends up with a bunch of PNG images in the downloads folder. So much for experiments and sensors.

This did not prevent me from experimentation. In the end I decided that each row of the data matrix will correspond to a country (experiment) and each column corresponds to a pixel value in a bitmap version of the PNG image (the features). As aside, I must admit that countries as experiments has a certain poetic air to it.

It may be confusing to think that flags that are inherently two-dimensional objects become the rows of another two-dimensional object, a matrix. However, as the bitmap images are read from the disk as contiguous chunks of bytes, they are row vectors from the point of view of this problem. It matters not what the exact bitmap data layout is as long as it is the same for all the flags.

I therefore chose the size of each bitmap to be exactly 128×85 pixels. This was more or less an arbitrary choice except for the fact that PCA is not the cheapest of procedures. In addition, since PCA is highly sensitive to outliers, I ruled out a handful of flags. I discarded Nepal (🇳🇵) because of its aspect ratio. Sorry Nepal. The rest of the outliers were territories with non-unique flags like Svalbard and Jan Mayen (🇸🇯) and the kingdoms of the UK as these do not have a two-letter country code. All in all, the set of unique flags with unique two-letter country codes comprised 244 elements in total.

At this point, we have constructed the data matrix X whose shape is (244, 3×128×85) (RGB) or (244, 128×85) (Greyscale). A final caveat about it is that the PCA literature recommends normalizing the data so that it has column-wise zero mean and unit variance.

The second step is to perform singular value decomposition. I consequently went shopping for an implementation. The choice pretty much determines the implementation language for this problem. Options are to use Matlab or one of its competitors (R, SciPy, Octave…) or go old-school and use C/C++ or Fortran. I also gave Swift a shot. Based on Apple’s documentation, it should possible to compute SVD with Apple’s SDK, but the implementation seems to be a mere rehashed hipster facade in front of good ol’ Fortran.

I ultimately chose Fortran and travelled back in time to the 1970’s. I am not kidding either. A portable single-file SVD implementation can be found from Alan Miller’s site. The source code lists a certain G.W. Stewart from the Argonne National Lab as the original author and mentions that the version dates back to the year 1979. The code is in the public domain. Kudos to the gentlemen for their work.

I used to program in Fortran at the university so writing a piece of code that crashes without any error messages is something I can do. However, the most difficult part of this problem was to understand conceptually the idea behind the PCA score matrix T. I wrote in the introduction that the output of PCA is a list of vectors and weight coefficients. The score matrix combines the two as it is formed from the diagonal matrix of singular values Σ and the left-singular vectors U: T=UΣ. So what?

After a certain amount of wondering, it finally dawned to me. The whole point about the score matrix is that its columns are the scaled PCA axes, and its rows are the coordinates per country in the PCA coordinate system. For example, if the bitmap for the flag of Finland is at row index n in the data matrix, the row n of the score matrix will contain the “PCA recipe” for Finland.

What is more, the score matrix can be truncated because of the way the singular values are ordered. It is sufficient to pick only a couple of the first columns. This is called rank reduction and it is the central goal of many applications of PCA. I played around with values ranging from 2 to 30 and settled with 5 in the end. For serious work, there are techniques for picking the rank intelligently based on the Eckart—Young theorem.

The loop over the countries is then a simple matter of picking a country code like FI and mapping it to an emoji 🇫🇮. This can be done based on a nifty trick I learned a while ago and now adapted to “modern” Fortran:

function country_emoji(code)

character(len=2), intent(in) :: code

integer, parameter :: ucs2 = selected_char_kind('ISO_10646')

character(kind=ucs2, len=2) :: country_emoji

integer, allocatable :: codepoints(:)

integer, parameter :: offset = 127365

integer :: i

codepoints = [( ichar(code(i:i)) + offset, i = 1, len(code) )]

country_emoji = transfer(codepoints, country_emoji)

end function

At each country, the corresponding row of the score matrix is then read and the output is as follows:

ad;🇦🇩;61;38;-26;-13;59

ae;🇦🇪;68;-41;106;46;31

af;🇦🇫;-214;76;-17;-10;-9

ag;🇦🇬;96;7;-6;-28;26

ai;🇦🇮;32;-93;-11;48;-55

...

zw;🇿🇼;46;33;-44;-28;-52

Finally, the sorting of the output can accomplished with the sort(1) command

line tool. The command sort -k3,3 -k4,4 -k5,5 -k6,6 -k7,7 -t';' -n sorts the

input numerically based on columns 3 thru 7. The sorting uses the higher order

scores if it gets stuck in a tie-break. This can be exploited to devise a simple

strategy for using the PCA scores to the fullest. If the scores are

coarse-grained to say the nearest multiple of 10, the number of tie-breaks is

increased and the sort command is encouraged to explore the higher order scores.

The resulting sort will then use information from multiple features

simultaneously at the cost of discarding finer details at each individual

feature.

Source code

The full source code is available at my GitHub. I will include the main program here for a reference:

include 'assertions.f90'

include 'utils.f90'

module image_class

use iso_fortran_env

implicit none

type :: image

character(:), allocatable :: file_name

integer(int32), allocatable :: bitmap(:,:)

end type

type :: image_matrix

type(image), allocatable :: images(:)

end type

contains

!!

!! Output shape: x(samples, image_bytes)

!! Columns : rgb_1,rgb_2,rgb_3...

!!

function matrix(self)

class(image_matrix), intent(in) :: self

real(real64), allocatable :: matrix(:,:)

integer :: i, rows, cols

integer(int32), allocatable :: bytes(:)

rows = size(self%images)

cols = 0

do i = 1, rows

cols = max(cols, size(self%images(i)%bitmap))

enddo

allocate(matrix(rows, cols), source=0.0d0)

do i = 1, rows

bytes = reshape(self%images(i)%bitmap, [cols])

matrix(i,:) = 1.0d0 * bytes

enddo

end function

end module

program main

use image_class

use dispmodule

use iso_fortran_env

use assertions

use utils

implicit none

character(len=32), allocatable :: args(:)

integer :: n, rank

real(real64), allocatable :: x(:,:)

call disp_set(sep = ', ', orient='row')

open(output_unit, encoding='utf-8')

args = cli_arguments()

n = size(args)

rank = 5

if (n == 0) then

call disp("Expected at least one argument")

stop 0

endif

call disp('Args: ', n)

call disp('Rank: ', rank)

call disp('-------------------------------------------------------------------')

call disp('RGB')

x = 1.0d0*nint(normalize(input(args)))

call output('rgb.txt', args, pca(x, rank))

call disp('-------------------------------------------------------------------')

call disp('Grayscale')

x = 1.0d0*nint(normalize(grayscale(input(args))))

call output('grayscale.txt', args, pca(x, rank))

contains

function input(arguments) result(x)

use, intrinsic :: iso_c_binding

real(real64), allocatable :: x(:,:)

character(len=*), intent(in) :: arguments(:)

integer(int32), allocatable :: bytes(:)

integer(int32) :: offset, i, file_size

type(image) :: images(size(arguments))

type(image_matrix) :: m

character(c_char), allocatable :: buffer(:)

integer, allocatable, target :: rgba(:,:)

integer, pointer :: alpha(:), rgb(:,:)

do i = 1, size(arguments)

inquire(file=arguments(i), size=file_size)

allocate(buffer(file_size))

open(10, file = arguments(i), access = 'stream', form = 'unformatted')

read(10) buffer

close(10)

! BMP image spec. Start of the bitmap array

offset = transfer(buffer(11:14), 1_int32) + 1

buffer = buffer(offset:)

! Sanity check

call assert(mod(size(buffer), 4) == 0, 'Array size mismatch')

rgba = reshape(ichar(buffer), [4, size(buffer)/4])

rgb => rgba(1:3,:)

alpha => rgba(4,:)

! Sanity check

call assert(all(alpha == 255), 'Expected full opacity')

images(i) = image(file_name=arguments(i), bitmap=rgb)

deallocate(buffer)

enddo

m = image_matrix(images)

x = matrix(m)

call disp('Data shape = ', shape(x))

end function

subroutine output(fname, args, result)

character(len=*), intent(in) :: fname

character(len=32), intent(in) :: args(:)

real(real64), intent(in), target :: result(:,:)

integer :: i

integer :: io

open(newunit=io, file=fname, status="replace", action="write", encoding='utf-8')

do i = 1, size(args)

write (io, '(AA)', advance='no') country_code(args(i)), ";"

write (io, '(AA)', advance='no') country_emoji(country_code(args(i))), " ;"

write (io, '(*(G0.5,:";"))', advance='no') 10d0*nint(0.1d0*result(i, :)) ! Coarse-grain features

write (io, '(A)', advance='yes') ''

enddo

close(io)

end subroutine

function pca(x, rank) result(t)

real(real64), allocatable, intent(in) :: x(:,:)

integer, intent(in) :: rank

real(real64), allocatable :: t(:,:)

real(real64), allocatable :: s(:), u(:,:), v(:,:)

integer :: i

call dsvd(x, s, u, v)

call disp('Left singular vectors = ', shape(u))

call disp('Sigma = ', reshape(s, [size(s)/10, 10], order=[2,1]))

allocate(t(size(u,1), size(u, 2)), source=0.0d0)

do i = 1, size(t, 2)

t(:,i) = u(:,i) * s(i)

enddo

call disp('Result shape = ', shape(t))

t = t(:, 1:rank) ! Rank reduction

call disp('Truncated result shape = ', shape(t))

end function

subroutine dsvd(x, s, u, v)

use svd, only: dsvdc

real(real64), intent(in) :: x(:,:)

real(real64), intent(out), allocatable :: s(:), u(:,:), v(:,:)

real(real64), allocatable :: e(:)

real(real64), allocatable :: xx(:,:)

integer :: n, p, job, info

! automatic allocation

xx = x

n = size(xx, 1)

p = size(xx, 2)

call disp('SVD shape = ', shape(xx))

call assert(n < p, 'Expected a matrix with shape n < p')

job = 10

allocate(s(min(n + 1, p)), source=0.0d0)

allocate(e(p), source=0.d0)

allocate(u(n, n), source=0.0d0)

call dsvdc(xx, n, p, s, e, u, v, job, info)

call assert(info == 0, 'Singular value decomposition failed.')

call assert(all(e(1:n) < epsilon(1.0_real64)), 'Singular value decomposition failed')

end subroutine

end program

Results and discussion

As promised, here are the country flags sorted according to the procedure I described in this blog post. The list is based on the values for the RGB data matrix. The raw output data table is available from rgb.txt. You may inspect and generate a similar ordering for the greyscale variant from grayscale.txt.

🇨🇾 🇦🇫 🇾🇹 🇯🇵 🇰🇷 🇧🇱 🇸🇲 🇫🇴 🇻🇮 🇱🇨 🇫🇮 🇬🇬 🇵🇼 🇭🇳 🇮🇱 🇺🇾 🇦🇶 🇬🇹 🇦🇷 🇯🇪 🇬🇪 🇬🇷 🇫🇲 🇰🇿 🇪🇪 🇻🇦 🇫🇯 🇧🇼 🇩🇯 🇹🇻 🇸🇴 🇳🇮 🇦🇼 🇺🇿 🇩🇿 🇸🇻 🇮🇪 🇸🇬 🇵🇱 🇸🇱 🇨🇩 🇳🇬 🇷🇸 🇽🇰 🇮🇸 🇷🇼 🇮🇴 🇨🇷 🇨🇮 🇲🇨 🇮🇩 🇬🇮 🇸🇪 🇬🇦 🇵🇲 🇱🇺 🇷🇪 🇧🇶 🇵🇦 🇸🇮 🇷🇺 🇧🇬 🇬🇱 🇱🇸 🇲🇬 🇮🇹 🇺🇸 🇨🇿 🇸🇰 🇲🇹 🇨🇻 🇦🇽 🇨🇺 🇺🇦 🇦🇿 🇲🇾 🇱🇷 🇲🇵 🇲🇭 🇧🇷 🇳🇫 🇸🇷 🇬🇧 🇰🇲 🇹🇭 🇫🇷 🇶🇦 🇧🇮 🇨🇱 🇹🇿 🇵🇰 🇲🇽 🇴🇲 🇵🇷 🇨🇦 🇧🇳 🇲🇴 🇨🇽 🇸🇧 🇳🇨 🇦🇸 🇬🇾 🇸🇹 🇵🇭 🇮🇷 🇧🇩 🇧🇦 🇵🇾 🇸🇿 🇪🇷 🇰🇵 🇳🇪 🇸🇨 🇳🇺 🇧🇭 🇧🇸 🇳🇱 🇳🇦 🇭🇷 🇹🇬 🇰🇮 🇭🇺 🇲🇿 🇨🇫 🇨🇬 🇱🇧 🇵🇫 🇧🇹 🇹🇲 🇩🇲 🇰🇭 🇿🇦 🇲🇺 🇹🇯 🇮🇳 🇬🇶 🇬🇫 🇿🇼 🇲🇲 🇵🇪 🇦🇺 🇨🇰 🇰🇾 🇹🇫 🇦🇮 🇫🇰 🇵🇸 🇧🇿 🇸🇽 🇩🇴 🇬🇼 🇬🇳 🇨🇲 🇲🇩 🇳🇷 🇹🇨 🇬🇸 🇸🇭 🇻🇬 🇨🇼 🇲🇸 🇳🇿 🇵🇳 🇪🇭 🇸🇸 🇹🇰 🇧🇧 🇯🇲 🇧🇫 🇲🇷 🇻🇨 🇧🇯 🇱🇰 🇲🇱 🇸🇳 🇪🇨 🇬🇺 🇯🇴 🇲🇶 🇭🇹 🇬🇭 🇰🇼 🇦🇩 🇨🇴 🇨🇨 🇸🇦 🇻🇺 🇱🇻 🇪🇹 🇲🇳 🇦🇲 🇬🇩 🇱🇹 🇻🇪 🇲🇼 🇰🇪 🇱🇾 🇿🇲 🇦🇪 🇬🇲 🇳🇴 🇩🇪 🇷🇴 🇹🇩 🇧🇪 🇦🇹 🇹🇴 🇬🇵 🇮🇶 🇸🇾 🇸🇩 🇾🇪 🇪🇬 🇰🇳 🇧🇴 🇺🇬 🇧🇲 🇩🇰 🇲🇰 🇱🇮 🇧🇾 🇦🇬 🇹🇹 🇼🇫 🇨🇭 🇪🇸 🇱🇦 🇵🇹 🇹🇼 🇲🇪 🇲🇻 🇼🇸 🇭🇰 🇹🇳 🇹🇷 🇵🇬 🇹🇱 🇮🇲 🇰🇬 🇻🇳 🇨🇳 🇦🇴 🇲🇦 🇦🇱Going over the list, one can definitely see a trend going from white to red flags. One can also see definite smaller patterns sprouting here and there. That Monaco and Indonesia follow each other is reassuring. Similarly, the countries Yemen, Egypt, Syria, Palestine and Iraq appear rightfully together.

However, it is clear that this is not the optimal ordering. A discrepancy that is immediately noticeable is the flag of Afghanistan, the second on the list. It turns out that the program used the current white Taliban flag of the country, and the country emojis have yet not been updated, perhaps in the hope that the regime would topple on its own. Another discrepancy seems to be that the various flags of the Polynesian islands resembling the flag of New Zealand/Australia do not completely cluster together. It may be because the fine features on the flags distort the PCA procedure at the selected image resolution.

That Nordic countries do not neatly align with each other is a result I can live with. Hopefully we will find unity in the imminent WW3.

Be that as it may, the principal problem I noticed while playing with this problem is that the procedure is extremely sensitive to any tweaks in the data. This is perhaps related to the observation that the singular values did not decay as rapidly as I would have wished. A second issue is that the sorting logic presented here is perhaps inferior to more advanced clustering techniques, but exploring them is beyond the scope of this blog.

In closing, this was a really fun exercise! It made me think about my memories and how human memory works in the first place. It seems that fingers have memories of their own, and the act of getting your hands dirty is the only way to become conscious of them. Even though a decade had passed since my Fortran days, my fingers still remembered it.